A while ago, when the new camera parameters function (llSetCameraParams) was added to LSL, I built a multi-camera switcher in Second Life. A little while later I updated it with camera points the user could move by editing the linked prims, and then eventually I got round to writing a blog post about it.

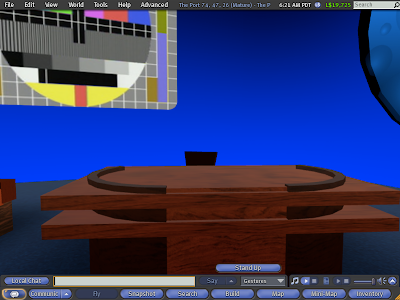

(Amazing what you can achieve in 3 years...)

WHAT is the Multi-Cam Machinima Camera Switcher (MCMCS)?

This tool gives you instant vision cutting between 8 camera setups, much like a TV vision mixer switching between multiple cameras in a studio. Using the MCMCS you can block out a range of camera positions in advance and then jump between them once you go into production. It is particularly suited to Machinima streamed LIVE out of Second Life, especially interview-style shows, where it lets the camera operator cut from close-ups of avatars to a wider shot of the stage, and so on.

For educators, this tool can also be used to explore conventions of film and video production, particularly crossing the line (the 180-degree rule) and jump cuts, as well as to develop an understanding of multi-camera shoots when access to real-life equipment is limited. It could also be used to quickly develop animatics for video productions.

For Machinima filmmakers: understand the limitations of this tool. It may be useful in some circumstances and not in others.

HOW to USE the Multi-Cam Machinima Camera Switcher (MCMCS)

1. Build your set, stage or interview room.

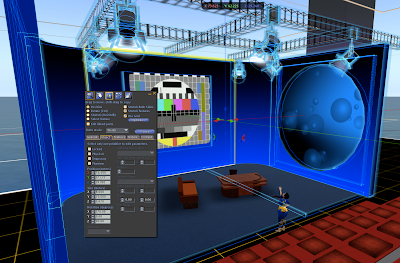

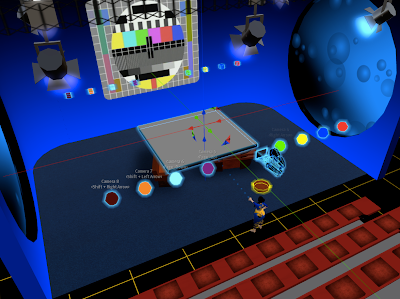

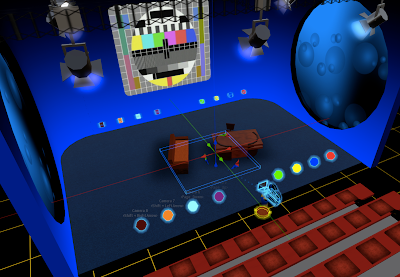

2. Rez the MCMCS and place the big grey square so it's somewhere in the middle of your set.

3. Whilst in Edit mode, move the MCMCS vertically down until the big grey block is under your set.

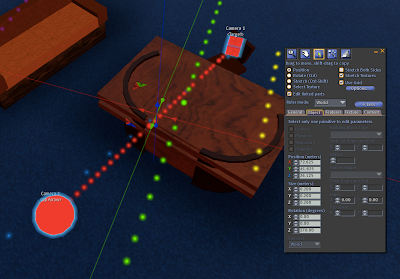

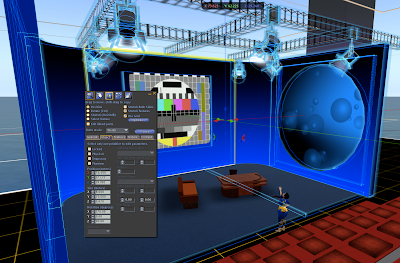

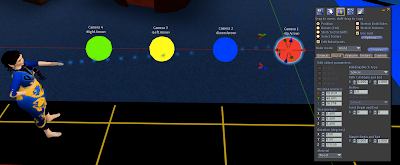

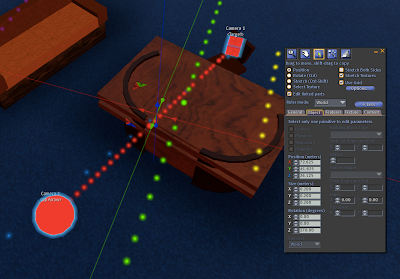

4. Now, to edit the individual cameras, select "Edit linked parts" in the Edit panel.


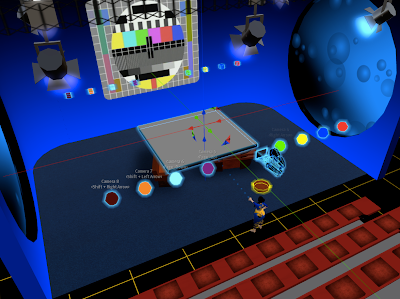

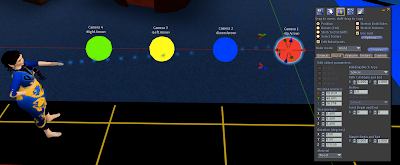

5. Each camera is identified by its colour and by the floating text above it; red is camera one. The sphere prim is the camera's position and the cube prim is the camera's target (i.e. where you want it to look). Whilst in Edit linked parts mode, move the sphere and cube prims to set up the camera shot.

6. Sit on the camera to see what the shot looks like. (Sitting on the camera makes all the camera prims and particles disappear.)

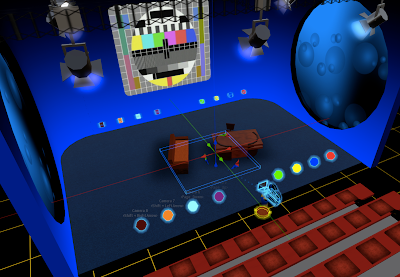

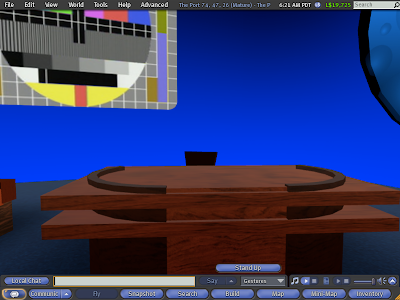

7. Camera One (the red one we just edited) is selected by pressing the Up Arrow, and your camera view should update to show that shot.

8. Stand your avatar up, go back to step 4 and repeat the process with the other coloured pairs, giving you up to 8 different camera framings.

Each camera prim has floating text above it showing the camera number and the corresponding arrow key to press.
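For anyone curious about what goes on behind the scenes, here is a minimal sketch of how the coloured sphere/cube pairs from step 5 could become up to 8 camera setups. It is written in Python purely for illustration and is not the MCMCS script itself, which runs as LSL inside the object. It assumes the sphere's region position is the camera position and the cube's is the camera target, and that the prims are named `cam1 sphere`, `cam1 cube` and so on; those prim names, the `CameraShot` class and `build_shots` are all hypothetical. `camera_params()` returns the kind of rule/value list a script would pass to LSL's `llSetCameraParams` (`CAMERA_ACTIVE`, `CAMERA_POSITION`, `CAMERA_FOCUS` and the two lock flags), with the LSL constants written here as plain strings.

```python
import math
from dataclasses import dataclass

# A region position in metres (x, y, z), standing in for an LSL vector.
Vector = tuple[float, float, float]


@dataclass
class CameraShot:
    number: int        # 1-8, matching the floating text above the prims
    position: Vector   # sphere prim: where the camera sits
    target: Vector     # cube prim: where the camera looks

    def distance(self) -> float:
        """Distance from the camera to its target, in metres."""
        return math.dist(self.position, self.target)

    def camera_params(self) -> list:
        """Rule/value pairs in the style of llSetCameraParams.

        The constant names are plain strings here (an assumption for
        illustration); in LSL they would be the real CAMERA_* constants.
        """
        return [
            "CAMERA_ACTIVE", 1,
            "CAMERA_POSITION", self.position,
            "CAMERA_FOCUS", self.target,
            "CAMERA_POSITION_LOCKED", 1,  # keep the camera fixed in place
            "CAMERA_FOCUS_LOCKED", 1,     # keep it pointed at the target
        ]


def build_shots(prims: dict[str, Vector]) -> dict[int, CameraShot]:
    """Pair hypothetical 'camN sphere' / 'camN cube' prims into shots.

    A camera is only included when both its sphere and cube are present,
    so an incomplete pair can never be cut to.
    """
    shots = {}
    for n in range(1, 9):  # up to 8 cameras
        pos = prims.get(f"cam{n} sphere")
        tgt = prims.get(f"cam{n} cube")
        if pos is not None and tgt is not None:
            shots[n] = CameraShot(n, pos, tgt)
    return shots


if __name__ == "__main__":
    # Example coordinates are made up for illustration.
    prims = {
        "cam1 sphere": (128.0, 128.0, 25.0),
        "cam1 cube": (131.0, 132.0, 25.0),
    }
    shots = build_shots(prims)
    print(shots[1].distance())       # 5.0
    print(shots[1].camera_params())
```

Keeping position and target as separate prims, rather than storing an angle and distance, matches how the tool is set up in-world: you frame the shot by dragging two objects, and the script only has to read their positions.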

-------

If you think this would be useful, you can pick up a copy outside my TechGrrl Store for L$25:

http://slurl.com/secondlife/Gourdneck/197/233/67